The international standard for accrediting a laboratory’s technical competence has evolved over the past 30-odd years, from ISO Guide 25:1982 to ISO Guide 25:1990, to ISO 17025:1999, to ISO 17025:2005 and now to the final draft international standard FDIS 17025:2017, which is due to be published before the end of this year to replace the 2005 version. We do not anticipate many changes to its contents other than editorial amendments.

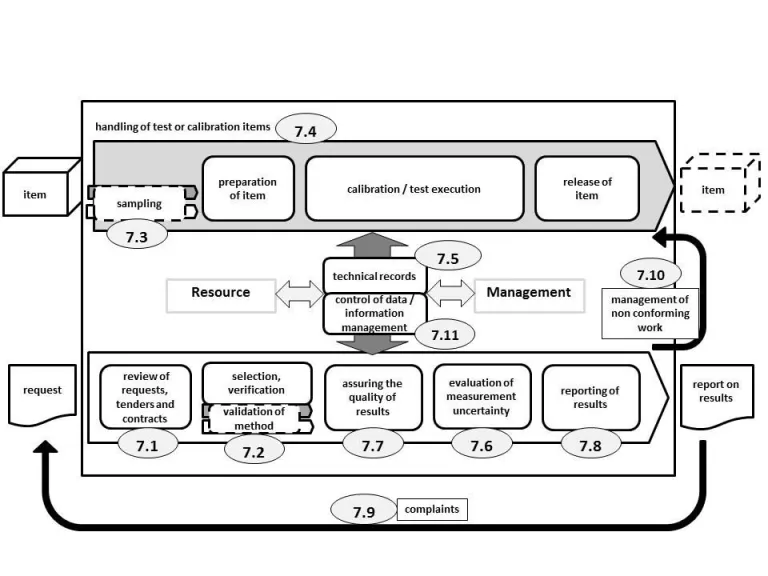

The new draft standard aims to align its structure and contents with other recently revised ISO standards, ISO 9001:2015 in particular. It reinforces a process-based model and focuses on outcomes rather than prescriptive requirements: for example, it eliminates familiar terms such as quality manual and quality manager, and gives less description of other documentation. In doing so, it attempts to introduce more flexibility into laboratory operation.

Although many requirements remain unchanged and merely appear in different places in the document, it has added some new concepts such as:

measurement uncertainty.

The purpose of introducing sampling as another activity is understandable, as we know that the reliability of test results hinges on how representative the sample drawn from the field is. The saying “a test result is no better than the sample that it is based upon” is very true indeed.

If an accredited laboratory’s routine activity also involves field sampling before laboratory analysis of the sample(s) drawn, the laboratory must show evidence of a robust sampling plan to start with, and must evaluate the associated sampling uncertainty.

It should be recognized, however, that in the course of analysis the laboratory has to sub-sample the sample received. This is to be part of the SOP, which must devote a section to how the sub-sampling is done. If the sample received is not homogeneous, sampling uncertainty has to be taken into account.

Although FDIS states that when evaluating the measurement uncertainty (MU), all components which are of significance in the given situation shall be identified and taken into account using appropriate methods of analysis, its Clause 7.6.3 Note 2 further states that “for a particular method where the measurement uncertainty of the results has been established and verified, there is no need to evaluate measurement uncertainty for each result if it can demonstrate that the identified critical influencing factors are under control”.

To me, it means that all identified critical factors influencing the uncertainty must be continually monitored. This will impose a heavy workload on the laboratory concerned, which has to keep track of many contributing components over time if the GUM method is used to evaluate its MU.

The main advantage of the top-down MU evaluation approach, which is based on holistic method performance using daily routine quality control data such as intermediate precision and bias estimates, is also recognized in Clause 7.6.3. Its Note 3 cites ISO 21748, which uses repeatability, reproducibility and trueness estimates as the budgets for evaluation of MU, as an information reference.

In addition, this clause in the FDIS suggests that once you have established the uncertainty of a result for a test method, you can estimate the MU of all test results within a predefined range through a relative uncertainty calculation.
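The relative uncertainty calculation can be sketched in a few lines of Python. This is only an illustration: the function name `scaled_uncertainty`, the figures and the range limits are hypothetical, and the sketch assumes that the relative uncertainty stays constant over the predefined range.

```python
def scaled_uncertainty(result, ref_result, ref_uncertainty, valid_range):
    """Estimate the uncertainty of `result` from a relative uncertainty.

    ref_result and ref_uncertainty are a result and its established
    uncertainty (hypothetical inputs). valid_range is the (low, high)
    predefined range over which the relative uncertainty is assumed
    to be constant.
    """
    low, high = valid_range
    if not low <= result <= high:
        raise ValueError("result lies outside the range covered by the MU evaluation")
    relative_u = ref_uncertainty / ref_result  # e.g. 0.05 means 5 %
    return relative_u * result


# Hypothetical figures: U = 0.6 mg/L established at 12.0 mg/L (relative U = 5 %)
u_30 = scaled_uncertainty(30.0, ref_result=12.0, ref_uncertainty=0.6,
                          valid_range=(5.0, 50.0))
print(f"U at 30.0 mg/L = {u_30:.2f} mg/L")
```

The range check is there because a relative uncertainty established at one level cannot be assumed to hold outside the range over which it was verified.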

An example of linear regression model after data transformation

Many course participants without a statistics background find the word “estimation” in statistics rather abstract and difficult to comprehend. The following explanations should give a clearer picture.

In carrying out statistical analysis, we must appreciate an important point: we are always trying to understand the characteristics or features of a larger phenomenon (called the population) from the analysis of data from samples collected from this population.

Then let’s differentiate the meanings of the words “parameter” and “statistic”.

A parameter is a statistical constant or number describing a feature of the entire phenomenon or population, such as the population mean μ or the population standard deviation σ, whilst a statistic is any summary number that describes the sample, such as the sample standard deviation s.

One of the major tasks of statisticians is estimating population parameters from sample statistics. For example, sample means are used to estimate population means, and sample proportions to estimate population proportions.

In short, estimation refers to the process by which one makes inferences about a population, based on information obtained from one or more samples. It is basically the process of finding the values of the parameters that make the statistical model fit the data the best.

In fact, estimation is one of the two common forms of statistical inference. The other is the null hypothesis test of significance, which includes the analysis of variance (ANOVA).

Point estimate. A point estimate of a population parameter is a single value of a statistic. For example, the sample mean is a point estimate of the population mean.

Interval estimate. An interval estimate is defined by a range between two numbers, within which a population parameter is said to lie with a certain degree of confidence. For example, the expression x̄ ± U, or x̄ − U < μ < x̄ + U, gives the uncertainty range for the estimate of the population mean μ.
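As a rough illustration of the two kinds of estimate, the sketch below computes a sample mean and a range around it from a handful of replicate results. The data are hypothetical, and the coverage factor k = 2 is an assumption (a commonly used value for roughly 95 % confidence); with only a few replicates, a Student’s t value would give a wider interval.

```python
import math
import statistics


def point_and_interval_estimate(values, k=2.0):
    """Return the sample mean and the interval (mean - U, mean + U).

    k is a coverage factor; k = 2 is an assumed, commonly used value
    for roughly 95 % confidence.
    """
    n = len(values)
    mean = statistics.mean(values)         # point estimate of the population mean
    s = statistics.stdev(values)           # sample standard deviation
    expanded_u = k * s / math.sqrt(n)      # U = k * standard error of the mean
    return mean, (mean - expanded_u, mean + expanded_u)


# Hypothetical replicate results
replicates = [10.1, 9.9, 10.2, 9.8, 10.0]
mean, (lower, upper) = point_and_interval_estimate(replicates)
print(f"point estimate: {mean:.2f}")
print(f"interval estimate: {lower:.2f} to {upper:.2f}")
```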

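It is to be noted that point estimates and parameters represent fundamentally different things. For example, the sample mean x̄ is a statistic whose value changes from one sample to the next, whereas the population mean μ is a fixed but generally unknown constant.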

Linear calibration curve – two common mistakes

Generally speaking, linear regression is used to establish or confirm a relationship between two variables. In analytical chemistry, it is commonly used in the construction of calibration functions required for techniques such as GC, HPLC, AAS, UV-Visible spectrometry, etc., where a linear relationship is expected between the instrument response (dependent variable) and the concentration of the analyte of interest.

The term ‘dependent variable’ is used for the instrument response because the value of the response depends on the value of the concentration. The dependent variable is conventionally plotted on the y-axis of the graph (scatter plot) and the known analyte concentration (independent variable) on the x-axis, to see whether a relationship exists between these two variables.

In chemical analysis, confirmation of such a relationship between these two variables is essential, and it can be established in the form of an equation. The other aspects of the calibration can then proceed.

The general equation which describes a fitted straight line can be written as:

y = a + bx

where b is the gradient of the line and a is its intercept with the y-axis. The least-squares linear regression method is normally used to establish the values of a and b. The ‘best fit’ line obtained from least-squares linear regression is the line which minimizes the sum of the squared differences between the observed (or experimental) and line-fitted values for y.

The signed difference between an observed value (y) and the fitted value (ŷ) is known as a residual. The most common form of regression is of y on x. This comes with an important assumption, i.e. the x values are known exactly without uncertainty and the only error occurs in the measurement of y.
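A minimal sketch of the least-squares calculation of a and b, together with the residuals, is given below. The calibration data are hypothetical, and the function name `fit_line` is only an illustration, not a routine from any particular instrument software.

```python
def fit_line(x, y):
    """Least-squares regression of y on x for the model y = a + b*x.

    Returns the intercept a, the gradient b and the list of residuals
    (observed y minus fitted y).
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    b = sxy / sxx                # gradient
    a = y_mean - b * x_mean      # intercept with the y-axis
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    return a, b, residuals


# Hypothetical calibration standards (concentration) and instrument responses
conc = [1.0, 2.0, 3.0, 4.0, 5.0]
response = [2.1, 3.9, 6.2, 7.8, 10.1]
a, b, residuals = fit_line(conc, response)
print(f"a = {a:.3f}, b = {b:.3f}")
print("residuals:", [round(r, 3) for r in residuals])
```

For a least-squares line that includes an intercept, the residuals sum to zero, which is a quick check on the arithmetic.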

Two mistakes are so common in the routine application of linear regression that they are worth describing so that they can be avoided:

Some instrument software allows a regression to be forced through zero (for example, by specifying removal of the intercept or ticking a “Constant is zero” option).

This is valid only with good evidence to support its use, for example, if it has previously been shown by statistical analysis that the y-intercept is not significant. Otherwise, interpolated values at the ends of the calibration range will be incorrect, and the error can be very serious near zero.
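One common way to check whether the y-intercept is significant is a t-test of the intercept against its standard error. The sketch below is an illustration under stated assumptions: the data are hypothetical, the function name is made up, and the critical value 3.182 is the tabulated two-sided 95 % Student’s t value for 3 degrees of freedom.

```python
import math


def intercept_t_statistic(x, y):
    """Fit y = a + b*x by least squares and return (a, s_a, t),
    where s_a is the standard error of the intercept and t = a / s_a."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    b = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y)) / sxx
    a = y_mean - b * x_mean
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    s_yx = math.sqrt(ss_res / (n - 2))      # residual standard deviation
    s_a = s_yx * math.sqrt(sum(xi ** 2 for xi in x) / (n * sxx))
    return a, s_a, a / s_a


# Hypothetical calibration data
conc = [1.0, 2.0, 3.0, 4.0, 5.0]
response = [2.1, 3.9, 6.2, 7.8, 10.1]
a, s_a, t_stat = intercept_t_statistic(conc, response)

T_CRIT = 3.182  # two-sided 95 % Student's t for n - 2 = 3 degrees of freedom
intercept_significant = abs(t_stat) > T_CRIT
print(f"a = {a:.3f}, s_a = {s_a:.3f}, t = {t_stat:.2f}")
print("intercept significant:", intercept_significant)
```

With these figures the intercept is not significantly different from zero, which is the kind of evidence needed before a fit through zero is considered.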

Sometimes it is argued that the point (x = 0, y = 0) should be included in the regression, usually on the grounds that y = 0 is the expected response at x = 0. This is bad practice and should not be done: it amounts to making up data.

Adding an arbitrary point at (0,0) will pull the fitted line closer to (0,0), making the line fit the data more poorly near zero and also making it more likely that a real non-zero intercept will go undetected (because the calculated y-intercept will be smaller).

The only circumstance in which a point (0,0) can validly be added to the regression data set is when a standard at zero concentration has been included and the observed response is either zero or is too small to detect and can reasonably be interpreted as zero.
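The effect of an arbitrary (0,0) point can be seen with a small numerical sketch. The data below are hypothetical and constructed to lie exactly on y = 0.5 + 0.5x, so the true intercept is known to be 0.5.

```python
def fit_line(x, y):
    """Least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    b = sxy / sxx
    return y_mean - b * x_mean, b


# Hypothetical standards lying exactly on y = 0.5 + 0.5*x
conc = [2.0, 4.0, 6.0, 8.0, 10.0]
response = [1.5, 2.5, 3.5, 4.5, 5.5]

a_real, b_real = fit_line(conc, response)

# The same data with an arbitrary, unmeasured (0, 0) point added
a_forced, b_forced = fit_line([0.0] + conc, [0.0] + response)

print(f"measured standards only: a = {a_real:.3f}, b = {b_real:.3f}")
print(f"with (0, 0) added:       a = {a_forced:.3f}, b = {b_forced:.3f}")
```

The added point roughly halves the calculated intercept and changes the gradient, even though none of the measured standards has changed.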

The Eurachem/CITAC Guide (2007) “Measurement Uncertainty arising from Sampling” provides examples of estimating sampling uncertainty. Example A1, on nitrate in glasshouse-grown lettuce, shows a summary of the classical ANOVA results for the duplicate method in the MU estimation, without detailed calculations.

The 2007 NORDTEST Technical Report TR604 “Uncertainty from Sampling” gives examples of how to use relative range statistics to evaluate the double split design, which is similar to the duplicate method suggested by Eurachem.

We have used an Excel spreadsheet to verify Eurachem’s results using the NORDTEST approach and found them satisfactory. The Two-Way ANOVA in the Excel Analysis ToolPak also gives similar results, but we have to combine the sum of squares of between-duplicate samples and the sum of squares of interaction.
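For readers who prefer to see the arithmetic, the classical ANOVA for a balanced duplicate design (two samples per sampling target, two analyses per sample) can be sketched as follows. The figures are hypothetical and are not the Eurachem lettuce data, the function name is made up, and the expanded uncertainty assumes a coverage factor of 2.

```python
import math


def duplicate_method_anova(targets):
    """Classical ANOVA for a balanced duplicate design.

    targets: list of ((s1a1, s1a2), (s2a1, s2a2)), i.e. two samples per
    sampling target and two analyses per sample.
    Returns (s_analysis, s_sampling, s_measurement).
    """
    n_targets = len(targets)
    sum_sq_analysis = 0.0
    sum_sq_sample = 0.0
    for sample_1, sample_2 in targets:
        for a1, a2 in (sample_1, sample_2):
            sum_sq_analysis += (a1 - a2) ** 2 / 2.0    # within-sample SS
        mean_1 = sum(sample_1) / 2.0
        mean_2 = sum(sample_2) / 2.0
        # SS between samples within a target: 2 analyses * (difference^2 / 2)
        sum_sq_sample += (mean_1 - mean_2) ** 2
    ms_analysis = sum_sq_analysis / (2 * n_targets)    # d.f. = number of samples
    ms_sample = sum_sq_sample / n_targets              # d.f. = number of targets
    var_analysis = ms_analysis
    # E[MS_sample] = var_analysis + 2 * var_sampling; floor at zero
    var_sampling = max((ms_sample - ms_analysis) / 2.0, 0.0)
    s_measurement = math.sqrt(var_sampling + var_analysis)
    return math.sqrt(var_analysis), math.sqrt(var_sampling), s_measurement


# Hypothetical results for four sampling targets
data = [
    ((100.0, 104.0), (110.0, 106.0)),
    ((200.0, 198.0), (190.0, 194.0)),
    ((150.0, 154.0), (160.0, 164.0)),
    ((120.0, 118.0), (126.0, 124.0)),
]
s_analysis, s_sampling, s_measurement = duplicate_method_anova(data)
expanded_u = 2.0 * s_measurement   # assumed coverage factor k = 2
print(f"s_analysis = {s_analysis:.2f}, s_sampling = {s_sampling:.2f}")
print(f"s_measurement = {s_measurement:.2f}, U = {expanded_u:.2f}")
```

The sketch ignores the between-target variation, since only the sampling and analytical components enter the measurement uncertainty of a single target.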

There is a growing interest in sampling and sampling uncertainty amongst laboratory analysts. This is mainly because the newly revised ISO/IEC 17025 accreditation standard, to be implemented soon, has added new requirements for sampling and for estimating its uncertainty. The standard recognizes that a test result is only as good as the sample it is based on, and hence the importance of representative sampling cannot be overemphasized.

As with measurement uncertainty, appropriate statistical methods involving the analysis of variance (frequently abbreviated to ANOVA) have to be applied to estimate the sampling uncertainty. Strictly speaking, the uncertainty of a measurement result has two contributing components, i.e. sampling uncertainty and analysis uncertainty. We have long been ignoring the sampling contribution.

ANOVA indeed is a very powerful statistical technique which can be used to separate and estimate the different causes of variation.

It is simple to use a Student’s t-test to see whether two mean values obtained from testing two samples differ significantly. But in analytical work, we are often confronted with more than two means for comparison. For example, we may wish to compare the mean concentrations of protein in a sample solution stored at different temperatures and for different holding times; we may also want to compare the concentration of an analyte obtained by several test methods.

In the above examples, we have two possible sources of variation. The first, which is always present, is due to the inherent random error in measurement. This within-sample variation can be estimated through a series of repeated tests.

The second possible source of variation is due to what are known as controlled (fixed-effect) or random-effect factors: in the above examples, the controlled factors are the temperature, the holding time and the method of analysis used for comparing test results. ANOVA then statistically analyzes the between-sample variation.

If there is one factor, either controlled or random, the type of statistical analysis is known as one-way ANOVA. When there are two or more factors involved, there is a possibility of interaction between variables. In this case, we conduct two-way ANOVA or multi-way ANOVA.
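As a simple illustration of one-way ANOVA, the sketch below separates the between-group and within-group variation for three hypothetical test methods, each with three replicate results. The data and the function name are illustrative only.

```python
def one_way_anova(groups):
    """One-way ANOVA; returns (ms_between, ms_within, f_ratio)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: variation of group means about the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: random error of measurement
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between, ms_within, ms_between / ms_within


# Hypothetical results for one analyte by three test methods
methods = [
    [10.0, 10.2, 9.8],
    [10.5, 10.7, 10.3],
    [9.9, 10.1, 10.0],
]
ms_between, ms_within, f_ratio = one_way_anova(methods)
print(f"MS between = {ms_between:.3f}, MS within = {ms_within:.3f}")
print(f"F = {f_ratio:.2f}")
```

The F ratio is then compared with the tabulated critical value for 2 and 6 degrees of freedom (about 5.14 at the 5 % level); a larger F suggests that the method means differ by more than random error alone would explain.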

On this blog site, several short articles on ANOVA have been previously presented. Valuable comments are always welcome.

../assets/uploads/2017/04/04/anova-variance-testing-an-important-statistical-tool-to-know/

../assets/uploads/2017/01/analysis-of-variance-anova-revisited.pdf

../assets/uploads/2016/10/the-arithmetic-of-anova-calculations.pdf

../assets/uploads/2017/01/how-to-interpret-an-anova-table.pdf