Upon request, I have tabulated the differences, advantages and disadvantages of the two broad approaches to measurement uncertainty (MU) evaluation for easier comparison.

| GUM (bottom-up) approach | Top-down approaches |
| --- | --- |
| Component-by-component using Gauss’ error propagation law for uncorrelated errors | Component-by-component using Gauss’ error propagation law for uncorrelated errors |
| Which components? | Which components? |
| Studies the uncertainty contributions in each step of the test method as far as possible | Uses the repeatability, reproducibility and trueness of the test method, according to the basic principle: accuracy = trueness (estimates of bias) + precision (estimates of random variability) |
| “Modeling approach” or “bottom-up approach”, based on a comprehensive mathematical model of the measurement procedure, evaluating individual uncertainty contributions as dedicated input quantities | “Empirical approach” or “top-down approach”, based on whole-method performance, capturing the effects of as many relevant uncertainty sources as possible through the method’s bias and precision data. Such approaches are fully compliant with the GUM, provided that the GUM principles are observed. |
| Acknowledged as the master document on the subject of measurement uncertainty | There are a few alternative top-down approaches, receiving greater attention from the global testing community today |
| GUM classifies uncertainty components by their method of determination into Type A and Type B. Type A: obtained by statistical analysis. Type B: obtained by means other than statistical analysis, such as adopting a stated uncertainty (e.g. from a CRM certificate) or past experience | Top-down approaches mainly consider Type A data from the laboratory’s own statistical analysis of within-lab method validation and inter-laboratory comparison studies |
| GUM assumes that systematic errors are either eliminated by technical means or corrected by calculation. | Top-down approaches allow for method bias in the uncertainty budget. |
| In GUM, all uncertainty components are treated equally when calculating the combined standard uncertainty of the final test result. | The top-down strategy combines existing data from validation studies with the flexibility of additional model-based evaluation of individual residual uncertainty contributions. |
| Advantages: | Advantages: |
| Disadvantages: | Disadvantages: |
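For reference, the “Gauss’ error propagation law for uncorrelated errors” mentioned in the table is, in GUM notation, the law of propagation of uncertainty for a measurand $y = f(x_1, \ldots, x_N)$ whose input estimates are uncorrelated:

$$
u_c^2(y) = \sum_{i=1}^{N} \left( \frac{\partial f}{\partial x_i} \right)^2 u^2(x_i), \qquad U = k \, u_c(y)
$$

Here $u(x_i)$ are the standard uncertainties of the inputs, the partial derivatives are the sensitivity coefficients, and $k$ is the coverage factor (typically $k = 2$ for roughly 95% coverage). A top-down budget applies the same quadrature sum to far fewer, larger components; in the Nordtest formulation, for example, $u_c = \sqrt{u^2(R_w) + u^2(\mathrm{bias})}$, combining within-laboratory reproducibility and bias.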

Top-Down Approaches to Measurement Uncertainty

With regard to measurement uncertainty (MU) evaluation for chemical, microbiological and medical testing laboratories, I have been strongly advocating the holistic “top-down” approaches. These consider the test method’s performance as a whole, making use of routine quality control (QC) and method validation data. They replace the time-consuming and clumsy step-by-step ISO GUM “bottom-up” approach, which studies the uncertainty contributions in each and every step of the laboratory analysis before combining them into the expanded uncertainty of the method.

Indeed, the top-down MU approach evaluates the overall variability of the analytical process in question.

Your ISO 17025 accredited laboratory should have a robust quality management system in place and carry out regular analyses of laboratory control check samples for each and every test method. This routine practice ensures the reliability and accuracy of the test results reported to end users.

Over time, you will have collected a wealth of QC data on the performance of all your test methods, sitting in your LIMS or other computer systems. You will also have carried out method validation or verification as part of your laboratory practice. What you really need to do is reorganize all these data to your benefit with the help of a PC or laptop.
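As an illustration of putting such QC data to work, here is a minimal Python sketch of one widely used top-down formulation, following the general structure of the Nordtest TR 537 handbook (not necessarily the exact procedure of any reference the author has in mind). The within-laboratory reproducibility u(Rw) is taken as the relative standard deviation of long-term control sample results; the bias component u(bias) combines the root mean square of observed biases (e.g. from CRM analyses or proficiency test rounds) with the uncertainty of the reference values; the two are combined in quadrature and multiplied by a coverage factor k = 2. The function name, inputs and all numbers are hypothetical.

```python
import math
import statistics


def top_down_uncertainty(qc_results, biases_percent, u_ref_percent, k=2.0):
    """Sketch of a Nordtest-style top-down MU estimate (all outputs in % relative).

    qc_results     -- long-term results on a stable control sample (same units)
    biases_percent -- relative biases (%) found against CRMs or PT assigned values
    u_ref_percent  -- relative standard uncertainty (%) of those reference values
    k              -- coverage factor (k = 2 gives roughly 95 % coverage)
    """
    # Within-lab reproducibility: relative SD of the control sample results
    u_rw = 100.0 * statistics.stdev(qc_results) / statistics.fmean(qc_results)

    # Bias component: RMS of observed biases combined with reference-value uncertainty
    rms_bias = math.sqrt(sum(b * b for b in biases_percent) / len(biases_percent))
    u_bias = math.sqrt(rms_bias ** 2 + u_ref_percent ** 2)

    # Combine in quadrature (components treated as uncorrelated), then expand
    u_c = math.sqrt(u_rw ** 2 + u_bias ** 2)
    return {"u_Rw": u_rw, "u_bias": u_bias, "u_c": u_c, "U": k * u_c}


# Hypothetical example data
qc = [9.8, 10.0, 10.2, 9.9, 10.1]   # control sample results, e.g. mg/L
biases = [3.0, -4.0]                # % bias found in two hypothetical PT rounds
result = top_down_uncertainty(qc, biases, u_ref_percent=1.0)
print({name: round(value, 2) for name, value in result.items()})
```

With these made-up inputs, u(Rw) is about 1.58%, u(bias) about 3.67%, the combined standard uncertainty 4.0% and the expanded uncertainty 8.0%. In practice the control results would be pulled from the LIMS rather than typed in.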

Of course, like the GUM approach, top-down approaches have certain limitations, such as a possible mismatch between your sample matrices and the laboratory reference check samples, or relatively unstable reference materials. These issues have been addressed by various top-down methods by adding an uncertainty contribution from the analysis of actual samples.

I list below some good references of the top-down approaches which are widely practiced by our US and EU peers. In Asia, I reckon China CNAS is probably the only national accreditation body leading the local testing industry in adopting these approaches. The other national accreditation bodies seem to be slow in promoting this noble subject for whatever reason.

An ideal experiment would have a design with relatively few runs that nonetheless covers many of the factors affecting the desired result. However, it is impossible to control and manipulate “too many” experimental factors accurately at the same time. Worse still, once we take the number of levels of each factor into account, the number of experimental runs required grows exponentially.

We can illustrate this point easily with the following discussion.

Assume that we have a 2-factor experiment, with factor A having 3 levels (say, temperature at 30 °C, 60 °C and 90 °C) and factor B having 2 levels (say, catalyst X and catalyst Y). Then there are 3 × 2 = 6 possible combinations of these two factors:

A(30) X

A(60) X

A(90) X

A(30) Y

A(60) Y

A(90) Y

Similarly, if there were a third experimental factor C with 4 levels (say, pressure at 1, 2, 3 and 4 bar), there would be 3 × 2 × 4 = 24 possible combinations:

A(30) X 1bar

A(60) X 1bar

A(90) X 1bar

A(30) Y 1bar

A(60) Y 1bar

A(90) Y 1bar

A(30) X 2bar

A(60) X 2bar

A(90) X 2bar

A(30) Y 2bar

A(60) Y 2bar

A(90) Y 2bar

A(30) X 3bar

A(60) X 3bar

A(90) X 3bar

A(30) Y 3bar

A(60) Y 3bar

A(90) Y 3bar

A(30) X 4bar

A(60) X 4bar

A(90) X 4bar

A(30) Y 4bar

A(60) Y 4bar

A(90) Y 4bar

The general pattern is therefore clear: if we have $m$ factors $F_1, F_2, \ldots, F_m$ with $k_1, k_2, \ldots, k_m$ levels respectively, there are $k_1 \times k_2 \times \cdots \times k_m$ possible runs in total. If every factor has the same number of levels $k$, the product reduces to $k \times k \times \cdots \times k$ ($m$ times), i.e. $k^m$.
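The counting rule can be checked directly by enumerating the example above. This minimal Python sketch uses `itertools.product` to generate every combination of one level per factor and `math.prod` to compute $k_1 \times k_2 \times k_3$; the variable names are ours, chosen only for illustration.

```python
import itertools
import math

# Factor levels from the example above
temperature_c = [30, 60, 90]   # factor A
catalyst = ["X", "Y"]          # factor B
pressure_bar = [1, 2, 3, 4]    # factor C
factors = [temperature_c, catalyst, pressure_bar]

# Every combination of one level from each factor is one full-factorial run
runs = list(itertools.product(*factors))

# The count equals the product k1 x k2 x ... x km of the numbers of levels
n_runs = math.prod(len(levels) for levels in factors)
print(n_runs, len(runs))   # 24 24

for temp, cat, pres in runs:
    print(f"A({temp}) {cat} {pres}bar")
```

The listing order differs from the one in the text (pressure varies fastest here), but the set of 24 runs is the same.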

Let’s see how seriously the number of experimental runs grows as the number of levels and factors increases.
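A short Python sketch makes the growth concrete by tabulating $k^m$ runs for $m = 1$ to $7$ factors at two and three levels. The helper name `full_factorial_runs` is hypothetical, introduced only for this illustration.

```python
def full_factorial_runs(levels, factors):
    """Runs in a full factorial with the same number of levels per factor: k ** m."""
    return levels ** factors


print(f"{'factors':>7} {'2 levels':>9} {'3 levels':>9}")
for m in range(1, 8):
    print(f"{m:>7} {full_factorial_runs(2, m):>9} {full_factorial_runs(3, m):>9}")
```

The 5-factor row already shows 32 runs at two levels and 243 at three; at 7 factors the figures are 128 and 2187.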

Clearly, if we want to run all possible combinations in a so-called full-factorial experiment, the number of runs becomes impractically large beyond 4 or 5 factors at 2 levels, and far more so at 5 factors with 3 levels.

You may then ask: if I stick to two-level designs, can I find ways to control the number of experimental runs?

The answer is yes, provided you can cleverly select a subset of the possible runs so that “most” of the important information obtainable from running all combinations of factor settings is still gained, but with a drastically reduced number of runs. One may do so by drawing on previous scientific knowledge, theoretical inference and/or experience, but this is not easy.

Indeed, there is a way to do so: follow the Pareto Principle, or 80/20 rule, defined as follows:

The Pareto Principle: for processes with many possible causes of variation, adequately controlling just the few most important causes is all that is required to produce consistent results. Or, to express it more quantitatively (as a rough approximation only), controlling the vital 20% of the causes achieves 80% of the desired effect.

In other words, in order to get the most bang for your buck, you should focus on the important stuff and ignore the unimportant. Of course, the challenge is determining what is important and what is not.
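One common way to pick out the “vital few” is a Pareto analysis of effect sizes, for instance from a screening experiment: rank the factors by the absolute size of their effects and keep adding them until their cumulative share reaches about 80%. The Python sketch below assumes such effect sizes are already available; the function name and the numbers are hypothetical.

```python
def vital_few(effects, threshold_percent=80):
    """Return the largest-effect factors whose cumulative share first reaches the threshold.

    effects -- mapping of factor name to absolute effect size
    """
    total = sum(effects.values())
    ranked = sorted(effects.items(), key=lambda item: item[1], reverse=True)
    selected, cumulative = [], 0
    for name, size in ranked:
        selected.append(name)
        cumulative += size
        # Same as cumulative / total >= threshold_percent / 100, without dividing
        if cumulative * 100 >= threshold_percent * total:
            break
    return selected


# Hypothetical absolute effects of 10 factors from a screening experiment
effects = {"A": 40, "B": 25, "C": 15, "D": 5, "E": 4,
           "F": 3, "G": 3, "H": 2, "I": 2, "J": 1}
print(vital_few(effects))  # ['A', 'B', 'C']
```

Here 3 of the 10 factors account for 80% of the total effect, which is the kind of result that justifies a much smaller follow-up design.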

If you can do so, a two-level full factorial design needs only $2^3 = 8$ runs to study the 3 important factors among, say, 10 candidates, instead of the $2^{10} = 1024$ runs needed to cover all 10!
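The 8-run design itself is easy to generate. This minimal Python sketch lists all $2^3$ runs in coded units (-1 for the low level, +1 for the high level); the factor names A, B and C are placeholders for whichever 3 factors turn out to be important.

```python
import itertools


def two_level_full_factorial(factor_names):
    """All 2**k runs of a two-level full factorial, coded -1 (low) / +1 (high)."""
    return [dict(zip(factor_names, levels))
            for levels in itertools.product((-1, +1), repeat=len(factor_names))]


# Hypothetical names for the 3 factors judged important
design = two_level_full_factorial(["A", "B", "C"])
for run_no, run in enumerate(design, start=1):
    print(run_no, run)
```

Each column sums to zero (every factor is run equally often at each level) and the products of any two columns also sum to zero (the columns are orthogonal), which is what allows each main effect to be estimated independently of the others.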

Let’s look into some key operational characteristics that a good experiment should have in order to be effective. These certainly include, but are not limited to, subject matter expertise, Good Laboratory Practice (GLP), proper equipment maintenance and calibration, and so forth, none of which has anything to do with statistics.

But, there are other operational aspects of experimental practice that do intersect statistical design and analysis, and which, if not followed, can also lead to inconsistent, irreproducible results. Among these are randomization, blinding, proper replication, blocking and split plotting. We shall discuss them in due course.

To begin with, we need to define and understand some terminology. It should be emphasized that the DOE terminology is not uniform across disciplines and even across textbooks within a discipline. Common terms are described below.

In fact, randomizing the order of test runs cannot prevent instrument drift, but it can help ensure that all levels of a factor have an equal chance of being affected by the drift. If so, differences in the response between pairs of factor levels will likely reflect the effects of the factor levels rather than the effect of the drift.
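A small simulation shows why. In this Python sketch the response contains nothing but a hypothetical linear drift, so any “effect” estimated for a factor is pure artefact. In standard order the first factor sits at its low level for the first four runs and at its high level for the last four, so the drift is fully confounded with it; shuffling the run order (here with a fixed seed so the result is reproducible) breaks that systematic link. A single random order can still be unlucky; randomization only makes drift equally likely to favour either level.

```python
import itertools
import random

# 8-run two-level design for 3 hypothetical factors, coded -1 / +1, in standard order
design = list(itertools.product((-1, 1), repeat=3))


def apparent_effect(order, factor, drift_per_run):
    """Estimated effect of `factor` (mean response at +1 minus mean at -1) when the
    response contains nothing but a linear drift over run time."""
    high, low = [], []
    for time_slot, run_index in enumerate(order):
        response = drift_per_run * time_slot  # pure drift, no true factor effect
        if design[run_index][factor] == 1:
            high.append(response)
        else:
            low.append(response)
    return sum(high) / len(high) - sum(low) / len(low)


standard_order = list(range(len(design)))
random_order = standard_order[:]
random.Random(2024).shuffle(random_order)  # fixed seed for reproducibility

# Factor 0 changes slowest in standard order, so the drift masquerades as its effect
print("standard order:", apparent_effect(standard_order, 0, drift_per_run=0.5))  # 2.0
print("random order:  ", apparent_effect(random_order, 0, drift_per_run=0.5))
```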

Analysis of variance (ANOVA) is a way to test whether the means of several groups differ from each other. Because its design lends itself to multiple groups, it is a popular testing method. Like a t-test (which compares only two means), ANOVA evaluates a null hypothesis against an alternative hypothesis in a single test.

Since ANOVA is, in effect, an extension of the t-test to more than two groups, it is important to grasp what a t-test does. Simply put, a t-test is a statistical comparison of the means of two sample groups: it determines whether the two means differ by more than random variation would explain. A t-test compares only two groups at a time, so comparing several groups requires multiple t-tests. While each t-test provides useful evidence about a hypothesis, running many of them comes at a risk: it increases the number of calculations and the time needed and, more importantly, it inflates the overall chance of a false positive (a Type I error). This risk is what leads to ANOVA, which handles all the groups in a single test.
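The inflation of the error rate can be put into numbers. With $g$ groups there are $g(g-1)/2$ pairwise t-tests, and if each is run at $\alpha = 0.05$ the chance of at least one false positive is roughly $1 - (1 - \alpha)^n$ for $n$ tests. This Python sketch treats the tests as independent, which is a simplifying assumption (pairwise comparisons sharing groups are not independent), so the figures are approximate.

```python
from math import comb


def familywise_error_rate(n_groups, alpha=0.05):
    """Pairwise t-tests needed for n_groups, and the approximate chance of at least one
    false positive, treating the tests as independent (a simplifying assumption)."""
    n_tests = comb(n_groups, 2)
    return n_tests, 1 - (1 - alpha) ** n_tests


for groups in (2, 3, 4, 6):
    n_tests, rate = familywise_error_rate(groups)
    print(f"{groups} groups: {n_tests} t-tests, P(at least one false positive) ~ {rate:.2f}")
```

With four groups, six t-tests are needed and the chance of at least one spurious “significant” difference rises to about 26%, compared with 5% for a single ANOVA at the same significance level.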

ANOVA’s statistical model compares the means of more than two groups of data. Like a t-test, it tests whether the differences between the groups are statistically significant. Because it is designed for multiple groups, it is preferred over conducting multiple two-group t-tests: it keeps the overall error rate at the chosen significance level and usually takes less time. ANOVA is, however, a general (omnibus) test: a significant result tells you that at least one group mean differs, not which one, so on its own it does not answer a narrowly scoped hypothesis about specific pairs of groups.

When an ANOVA test is conducted, its result is used to decide whether the null hypothesis can be rejected. The null hypothesis is that all the population means are equal, the populations being the groups subjected to different treatments or variables.

The p-value is the probability of obtaining a result at least as extreme as the one observed if the null hypothesis were true. If the p-value is less than the chosen significance level, such as 0.05 for 95% confidence, the null hypothesis is rejected: there is evidence of a difference between the populations based on the variables. If the p-value is greater than the significance level, the null hypothesis cannot be rejected, meaning there is not enough evidence to suggest any difference.

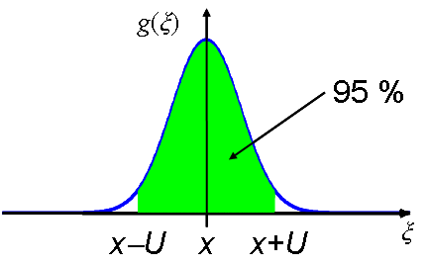

With larger data sets, it is important to group the data in order to judge whether the null hypothesis should be rejected. Confidence intervals can be used to estimate the difference between groups. A confidence interval expresses the uncertainty of an estimate: it is a range of values that, at a stated confidence level, is expected to contain the true population value, such as the population mean.

Because ANOVA compares population means by analysing their variances, it has many practical uses in a laboratory setting. A laboratory must meet many standards to be verified as being in working order. An ANOVA test can help identify issues that might arise from implementing certain procedures, support scientifically sound predictions based on the variables studied, and reveal deficiencies in a practice or procedure. Eliminating those practices, or changing how they are implemented, can contribute to the overall professionalism of the lab, and the resulting changes can make the lab more productive and efficient.

ANOVA is designed to compare multiple populations, which makes it much more convenient than t-tests in this setting. For instance, suppose a long-established analysis of an analyte can be performed by 4 different test methods. To determine with t-tests alone which method works best and produces the most acceptable results, the lab would have to carry out multiple pairwise t-tests (six of them, for four methods).
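For the four-method example, a one-way ANOVA replaces those six t-tests with a single F-test. The Python sketch below computes the F statistic from first principles: the ratio of the between-method mean square to the within-method mean square. The triplicate results are invented purely for illustration, and `one_way_anova` is our own helper, not a library function.

```python
import statistics


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on a list of samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.fmean(all_values)
    # Between-group variation: how far each group mean sits from the grand mean
    ss_between = sum(len(g) * (statistics.fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variation: scatter of replicates around their own group mean
    ss_within = sum((x - statistics.fmean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical triplicate results for one analyte by 4 test methods
methods = {
    "method 1": [10.0, 10.2, 9.8],
    "method 2": [10.1, 10.3, 9.9],
    "method 3": [10.6, 10.8, 10.4],
    "method 4": [9.9, 10.1, 9.7],
}
f_stat, df1, df2 = one_way_anova(list(methods.values()))
print(f"F = {f_stat:.2f} with ({df1}, {df2}) degrees of freedom")  # F = 7.25 with (3, 8) degrees of freedom
```

Since 7.25 exceeds the tabulated 5% critical value of about 4.07 for (3, 8) degrees of freedom, the null hypothesis of equal method means would be rejected for these made-up data; a post hoc comparison would then be needed to find which method differs. If SciPy is available, `scipy.stats.f_oneway(*methods.values())` returns the F statistic together with its p-value.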

Suppose a lab is asked to perform that analysis while it is still trying to work out the best way to do it. It will have to use the method it has been using, whether or not that is the most efficient. If it is less efficient, it may take too much time, which could lead to loss of business. Using the wrong procedure could also produce a faulty outcome.

What is Statistical Design of Experiments (DOE)?

You can collect scientific data in two fundamentally different ways: first, through passive observation, such as astronomical observations, weather measurements or earthquake seismometer readings; and second, through active experimentation.

Passively observed events and data are essential for determining how Nature works. They serve both as raw material from which new models (that is, new theories) are built and as evidence for or against existing ones.

Active experimentation, on the other hand, is powerful for establishing the relationship between observed phenomena and their contributing causes. You can manipulate and evaluate the effects of possibly many different inputs, commonly referred to as experimental factors. However, experimentation can be expensive and time consuming, and experimental ‘noise’ can make it difficult to clearly understand and interpret the results.

The classical experimental approach is to study each experimental factor separately. This one-factor-at-a-time (OFAT) strategy is easy to handle and widely employed. But, as we all know, it is not the most efficient way to approach an experimental problem when many variable factors have to be considered.

Why?

Remember that the goal of any experiment is to obtain clear, reproducible results and establish general scientific validity. When a study involves a large number of variables, experimenting on each variable in turn while holding the others constant can be very time consuming. Moreover, we cannot afford to run large numbers of trials because of budget constraints and other considerations.

Hence, we need a statistically planned approach that gives experimenters and researchers an efficient research strategy, helping them obtain better information faster and with less experimental effort. Design of experiments (DOE) is such a planned approach for determining cause-and-effect relationships. It can be applied to any process or experiment with measurable inputs and outputs.

To appreciate the power of DOE, let’s learn some of its historical background.

DOE was first developed for agricultural purposes. Agronomists were the first scientists to confront the problem of organizing their experiments to reduce the number of trials in the field. Their studies invariably include a large number of factors (parameters), such as soil composition, fertilizers’ effect, sunlight available, ambient temperature, wind exposure, rainfall rate, species studied, etc., and each experiment tends to last a long time before seeing and evaluating the results.

In the 1920s and 1930s, R. A. Fisher, working at the Rothamsted agricultural research station in England, first proposed methods for organizing trials so that a combination of factors could be studied at the same time. These included the Latin square design and the analysis of variance. Fisher’s ideas were subsequently taken up by statisticians working on agricultural experiments, such as Yates and Cochran, and by others such as Plackett and Burman, Youden, etc.

During World War II and thereafter, DOE became a tool for quality improvement, along with statistical process control (SPC). DOE concepts were used to develop powerful methods that proved useful to major industrial companies such as Du Pont de Nemours, ICI in England and TOTAL in France, which began using experimental designs in their laboratories to speed up research on the products they investigated.

Until 1980, DOE was used mainly in the process industries (i.e. chemical, food, pharmaceutical), because engineers there could easily manipulate factors such as time, temperature, pressure and flow rate. Then, stimulated by the tremendous success of Japanese electronics and automobiles, SPC and DOE underwent a renaissance. Today, the advent of personal computers has further catalyzed the use of these numerically intensive methods.