I recently came across an interesting article published in 1944 by Harold Hotelling, a renowned American mathematical statistician and influential economic theorist. He is well known for Hotelling’s law, Hotelling’s lemma and Hotelling’s rule in economics, and for Hotelling’s T-squared distribution in statistics. The article is titled “Some Improvements in Weighing and Other Experimental Techniques”.

Hotelling wanted to make physical and chemical investigations more accurate and less costly through better-designed experiments grounded in the theory of statistical inference. To that end he took up the work of F. Yates, “Complex Experiments”, Jour. Roy. Stat. Soc., Supp., vol. 2 (1935), pp. 181-247, and suggested various improvements to the weighing process.

When we are given several objects to weigh, our normal practice is to weigh each one in turn on a balance whose error has a standard deviation of, say, s.

Suppose we have two objects, A and B, to weigh, and we obtain the weights ma and mb. The reported weights Ma and Mb are then:

Ma = ma +/- s

Mb = mb +/- s

If ma and mb had values of 20 and 10 grams respectively, and s was 0.1 gram, then:

Ma = 20 +/- 0.1g

Mb = 10 +/- 0.1g

Hotelling noticed, however, that greater precision could be obtained for the same effort if both objects were included in each weighing. In the first weighing, objects A and B are placed together on the same pan. In the second, A is placed on one pan and B on the other, so that the balance gives the difference between their weights. The masses ma and mb are then deduced from the two readings, p1 and p2, as follows:

ma + mb = p1

ma – mb = p2

Hence, ma = (p1 + p2)/2

and mb = (p1 – p2)/2
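As a minimal Python sketch, the two readings can be converted back into the individual masses. The function name `solve_two_weighings` is hypothetical, and the readings p1 = 30 g and p2 = 10 g are assumed to be ideal, error-free values consistent with ma = 20 g and mb = 10 g.

```python
def solve_two_weighings(p1: float, p2: float) -> tuple[float, float]:
    """Recover (ma, mb) from p1 = ma + mb and p2 = ma - mb."""
    ma = (p1 + p2) / 2  # adding the two equations cancels mb
    mb = (p1 - p2) / 2  # subtracting them cancels ma
    return ma, mb


# Ideal readings for ma = 20 g and mb = 10 g:
# p1 = 30 g (both objects on one pan), p2 = 10 g (A against B).
print(solve_two_weighings(30.0, 10.0))  # (20.0, 10.0)
```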

By the law of propagation of error, the variance of a sum or difference of independent measurements is the sum of their variances, with no covariance terms to consider. Multiplying a measurement by a constant c multiplies its variance by c². Here both ma and mb are obtained by multiplying a sum or difference of the two readings by c = 1/2, so the variance is multiplied by c² = 1/4. Therefore,

Var(ma) = [Var(p1) + Var(p2)]/4 = [s² + s²]/4 = s²/2

Var(mb) = [Var(p1) + Var(p2)]/4 = [s² + s²]/4 = s²/2

Hence, with this method, the variances of ma and mb are both s²/2. This is half the variance, s², obtained when each object is weighed separately.
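The propagation-of-error step can be checked with a few lines of Python. This sketch assumes, as the text does, that p1 and p2 are independent and share the same variance s². It uses the rule that a linear combination Σ cᵢxᵢ of independent readings has variance Σ cᵢ² Var(xᵢ). The helper name `var_linear_combination` is illustrative only.

```python
def var_linear_combination(coeffs, variances):
    """Variance of sum(c_i * x_i) for independent x_i: sum(c_i**2 * var_i)."""
    return sum(c * c * v for c, v in zip(coeffs, variances))


s = 0.1                 # balance standard deviation in grams (example value from the text)
var_p = [s**2, s**2]    # Var(p1), Var(p2): each reading has variance s^2

var_ma = var_linear_combination([0.5, 0.5], var_p)   # ma = (p1 + p2)/2
var_mb = var_linear_combination([0.5, -0.5], var_p)  # mb = (p1 - p2)/2

# Both should equal s^2/2, half the single-weighing variance s^2.
print(var_ma, var_mb, s**2 / 2)
```

The sign of a coefficient does not matter because it is squared. That is why the difference reading contributes the same variance to mb as the sum reading does to ma.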

The standard error of each result is therefore s/√2 ≈ 0.71s rather than s, so that:

Ma = 20 +/- 0.07g

Mb = 10 +/- 0.07g
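A quick Monte Carlo simulation provides an independent check of these error figures. The sketch below assumes normally distributed balance errors with s = 0.1 g and uses the example masses of 20 g and 10 g. The function name `simulate`, the seed and the number of trials are arbitrary choices, not taken from the article.

```python
import random
import statistics


def simulate(ma=20.0, mb=10.0, s=0.1, trials=100_000, seed=1):
    """Compare the spread of the two weighing plans, assuming Gaussian errors."""
    rng = random.Random(seed)
    sep_a, sep_b, comb_a, comb_b = [], [], [], []
    for _ in range(trials):
        # Plan 1: weigh each object on its own (two weighings).
        sep_a.append(ma + rng.gauss(0, s))
        sep_b.append(mb + rng.gauss(0, s))
        # Plan 2: p1 = A and B on the same pan, p2 = A on one pan, B on the other.
        p1 = ma + mb + rng.gauss(0, s)
        p2 = ma - mb + rng.gauss(0, s)
        comb_a.append((p1 + p2) / 2)
        comb_b.append((p1 - p2) / 2)
    return {
        "separate A": statistics.stdev(sep_a),
        "separate B": statistics.stdev(sep_b),
        "combined A": statistics.stdev(comb_a),
        "combined B": statistics.stdev(comb_b),
    }


for name, sd in simulate().items():
    print(f"{name}: {sd:.4f} g")
```

Both plans use two weighings. With 100,000 simulated pairs, the separate-weighing standard deviations should come out close to 0.1 g and the combined-plan ones close to 0.07 g. The exact digits depend on the seed.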

An important inference from this illustration concerns experiments with a fixed number of trials. Including all the variables, or factors, in every trial, rather than varying them one at a time, improves precision.
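The same principle can be expressed in matrix form. This sketch is an illustration rather than the article’s own notation. Each row of a design matrix X is one weighing, with +1 for an object on the left pan, -1 for an object on the right pan and 0 for an object left off the balance. Assuming independent errors of equal variance s² and least-squares estimation, the variance of each estimated mass is s² times the corresponding diagonal entry of (XᵀX)⁻¹. The four-object plan, built from a 4 × 4 Hadamard matrix, is a hypothetical extension added here to show the trend. It is not an example from the text.

```python
from fractions import Fraction


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    # Row of a dotted with each column of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(m):
    """Gauss-Jordan inverse using exact Fraction arithmetic."""
    n = len(m)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def variance_factors(design):
    """Diagonal of (X^T X)^-1, so that Var(estimate_i) = s^2 * factor_i."""
    inv = inverse(matmul(transpose(design), design))
    return [inv[i][i] for i in range(len(inv))]


designs = {
    "2 objects, weighed separately": [[1, 0], [0, 1]],
    "2 objects, A+B then A-B": [[1, 1], [1, -1]],
    "4 objects, weighed separately": [[1, 0, 0, 0], [0, 1, 0, 0],
                                      [0, 0, 1, 0], [0, 0, 0, 1]],
    "4 objects, Hadamard plan (hypothetical)": [[1, 1, 1, 1], [1, -1, 1, -1],
                                                [1, 1, -1, -1], [1, -1, -1, 1]],
}
for name, x in designs.items():
    print(name, [str(f) for f in variance_factors(x)])
```

Each factor multiplies s². For the two-object case the sketch reproduces s²/2. For the hypothetical four-object plan it gives s²/4 per object from the same four weighings that separate weighing would need.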

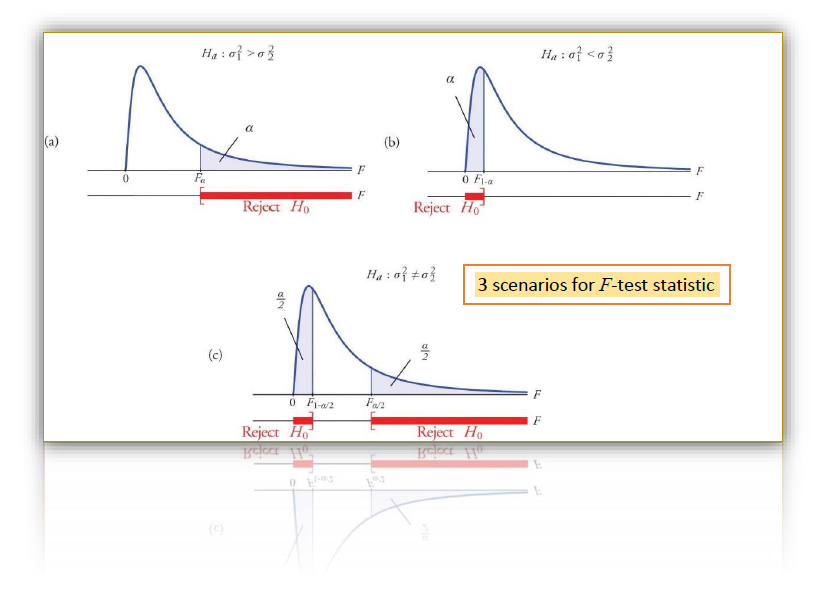

Before carrying out a statistical test to compare two sample means in a comparative study, we first have to test whether the sample variances differ significantly. The test used is Fisher’s F-test, or variance-ratio test, named after the famous statistician Sir Ronald Fisher. It is widely used as a test of statistical significance.

R and F-test

The Fisher F-test statistic is the ratio of two experimentally observed variances, that is, of two squared standard deviations. It can therefore be used to test whether two standard deviations, s1 and s2, calculated from two independent data sets, differ significantly in precision.
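The F ratio itself is simple to compute. In this minimal Python sketch, the function name `f_ratio` is hypothetical and the two data sets are invented repeat readings used only for illustration. The larger variance is placed in the numerator, a common convention that keeps F ≥ 1. The resulting F is then compared with a tabulated critical value for the given degrees of freedom and chosen significance level. The sketch does not look that value up.

```python
import statistics


def f_ratio(sample1, sample2):
    """Return (F, df_numerator, df_denominator) with the larger variance on top."""
    v1 = statistics.variance(sample1)  # sample variance, n - 1 denominator
    v2 = statistics.variance(sample2)
    if v1 >= v2:
        return v1 / v2, len(sample1) - 1, len(sample2) - 1
    return v2 / v1, len(sample2) - 1, len(sample1) - 1


# Hypothetical repeat readings (grams) from two balances, invented for illustration.
a = [20.1, 19.9, 20.0, 20.2, 19.8]
b = [20.05, 19.95, 20.0, 20.02, 19.98]

F, df1, df2 = f_ratio(a, b)
print(f"F = {F:.2f} with ({df1}, {df2}) degrees of freedom")
```

If F exceeds the critical value, the difference in precision between the two data sets is judged significant at that level.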
