Proficiency testing – what, why and how (Part II)

Part I of this article series discussed the rationale for conducting proficiency testing (PT) programs and the main tools of proficiency assessment, such as setting an assigned value and estimating the standard deviation that reflects inter-laboratory variation. Let’s now see how PT results are scored.

1. The z-Score

The most common scoring system is the z-score. For a proficiency test result $x_i$, it is calculated as:

$$z_i = \frac{x_i - x_a}{\sigma_{pt}}$$

where

$x_a$ is the assigned value and

$\sigma_{pt}$ is the standard deviation for proficiency assessment.

Readers who are familiar with the normal probability distribution will recognize the purpose of the z-score: it standardizes normally distributed data to the standard normal distribution N(0, 1), with mean = 0 and variance $\sigma^2 = 1$.

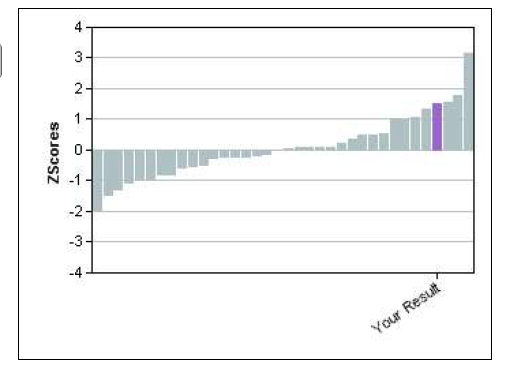

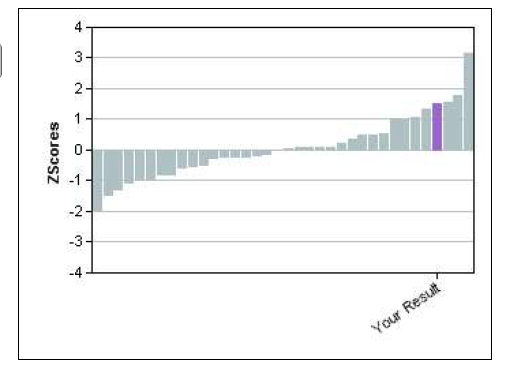

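The conventional interpretation of z-scores is as follows:

| z | ≤ 2.0 is considered satisfactory;

2.0 < | z | < 3.0 is considered questionable (a warning signal);

| z | ≥ 3.0 is considered unsatisfactory (an action signal).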

Assuming all participants perform exactly in accordance with the performance requirements, about 95% of values are expected, under the normal distribution, to lie within two standard deviations of the mean value. In other words, there is only about a 5% chance that a valid result would fall more than two standard deviations from the mean.

The probability of finding a valid result more than three standard deviations away from the mean is very low (approximately 0.3% for a normal distribution). Therefore, a score of | z | ≥ 3 is considered unsatisfactory performance. Also, participants should be advised to check their measurement procedures following warning signals in case they indicate an emerging or recurrent problem.
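The scoring and interpretation described above can be sketched in a few lines of Python. This is only an illustration: the function names, the laboratory names and the numerical values are hypothetical, and the limits of 2 and 3 are the conventional ones discussed in the text.

```python
def z_score(x_i, x_a, sigma_pt):
    """Return z = (x_i - x_a) / sigma_pt for one participant result."""
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    return (x_i - x_a) / sigma_pt


def classify(z):
    """Conventional interpretation of a z-score."""
    magnitude = abs(z)
    if magnitude <= 2.0:
        return "satisfactory"
    if magnitude < 3.0:
        return "questionable"  # warning signal
    return "unsatisfactory"    # action signal


# Hypothetical round: assigned value 10.0 with sigma_pt = 0.5 (same units)
x_a, sigma_pt = 10.0, 0.5
results = {"Lab A": 10.3, "Lab B": 11.3, "Lab C": 8.2}

for lab, x_i in results.items():
    z = z_score(x_i, x_a, sigma_pt)
    print(f"{lab}: z = {z:+.2f} ({classify(z)})")
```

With these made-up figures, Lab A falls within two standard deviations, Lab B receives a warning signal, and Lab C lies more than three standard deviations from the assigned value.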

When there is concern about the uncertainty of the assigned value, $u(x_a)$ (e.g. when $u(x_a) > 0.3\sigma_{pt}$), this uncertainty can be taken into account by expanding the denominator of the performance score.

This statistic, called the z’-score, is calculated as follows:

$$z'_i = \frac{x_i - x_a}{\sqrt{\sigma_{pt}^2 + u^2(x_a)}}$$

The criteria for assessing a laboratory’s performance by z’-scores are the same as those for z-scores.
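A minimal sketch of the z’-score follows, assuming the usual form in which the standard uncertainty of the assigned value is combined in quadrature with the standard deviation for proficiency assessment. The function names and the numbers are hypothetical.

```python
import math


def needs_z_prime(sigma_pt, u_xa):
    """True when u(x_a) exceeds 0.3 * sigma_pt, the rule of thumb in the text."""
    return u_xa > 0.3 * sigma_pt


def z_prime_score(x_i, x_a, sigma_pt, u_xa):
    """z' = (x_i - x_a) / sqrt(sigma_pt^2 + u(x_a)^2)."""
    return (x_i - x_a) / math.sqrt(sigma_pt ** 2 + u_xa ** 2)


# Hypothetical values: u(x_a) = 0.2 is larger than 0.3 * 0.5 = 0.15
x_i, x_a, sigma_pt, u_xa = 11.3, 10.0, 0.5, 0.2
if needs_z_prime(sigma_pt, u_xa):
    print(f"z' = {z_prime_score(x_i, x_a, sigma_pt, u_xa):.2f}")
```

Because the denominator is larger, the z’-score is always closer to zero than the corresponding z-score; here the same result that would score z = 2.6 scores roughly 2.4.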

An alternative scoring system is the Q-score, where:

$$Q_i = \frac{x_i - x_a}{x_a}$$

The above equation is essentially a relative measure of a laboratory’s bias and does not take the target standard deviation into account. Ideally, when there is no significant bias in the participants’ measurement results, the distribution of Q-scores will be centered on zero.

Any interpretation of the results is based on the acceptability criteria set by the PT program organizer, such as an acceptable percentage deviation from the target value.
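As a small illustration, the Q-score can be computed as the relative deviation from the assigned value and compared with an organizer-defined limit. The 10% limit used below is purely hypothetical, as are the function names.

```python
def q_score(x_i, x_a):
    """Relative deviation Q = (x_i - x_a) / x_a."""
    if x_a == 0:
        raise ValueError("assigned value must be non-zero")
    return (x_i - x_a) / x_a


def within_limit(q, max_relative_deviation):
    """Check Q against an acceptability limit chosen by the organizer."""
    return abs(q) <= max_relative_deviation


q = q_score(10.3, 10.0)          # hypothetical result and assigned value
print(f"Q = {q:.1%}, acceptable: {within_limit(q, 0.10)}")
```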

Issues to be considered by a laboratory found to have an unsatisfactory score:

If none of the above applies, the laboratory shall investigate the cause of the unsatisfactory result, then implement and document any appropriate corrective actions.

There are many possible causes of unsatisfactory performance. Some of these are listed below:

In conclusion, participation in well-run PT schemes lets us learn how our measurement results compare with those of others, whether our own measurements improve or deteriorate with time, and how our laboratory’s performance compares with an external quality standard. In short, the aim of such schemes is to evaluate the competence of analytical laboratories. A PT program indeed plays an important part in a laboratory’s QA/QC system.

We may want to claim to data users that our analytical results are reliable and accurate, but nothing supports that claim better than showing them the reports of the proficiency testing (PT) programs we have participated in, which testify to our good quality standing. So, what is proficiency testing?

ISO 13528:2015 defines proficiency testing as “evaluation of participant performance against pre-established criteria by means of interlaboratory comparisons”.

Therefore, a proficiency testing program typically involves the simultaneous distribution of sufficiently homogeneous and stable test samples to laboratory participants. It is usually organized by an independent PT provider which is an organization that takes responsibility for all tasks in the development and operation of a proficiency testing scheme.

The participants are to analyze the samples using either a method of their choice or a specified standard method, and submit their results to the scheme organizers, who will then carry out statistical analysis of all the data and prepare a final PT report showing the ‘scores’ of all participants to allow them to judge their own performance in that particular round. Ideally, all participants should conduct the testing with the same standard method for more meaningful comparison of results.

The scores reflect the difference between each participant’s result and a target or assigned value for that round, judged against a quality target that usually takes the form of a standard deviation. Such a comparison is important because it makes allowance for measurement error. The scoring system should set acceptability criteria that allow participants to evaluate their performance.

The primary aim of PT therefore is to allow participating laboratories to monitor and optimize the quality of their routine analytical measurements. It may be noted that PT is concerned with the assessment of participant performance and as such does not specifically address bias or precision.

There are several international guidelines and standards for organizing a PT round and statistical analytical methods for these inter-laboratory comparison data. The well known ones are ISO 13528:2015 Statistical methods for use in proficiency testing by interlaboratory comparison, which provides statistical support for the implementation of ISO/IEC 17043:2010 Conformity assessment — General requirements for proficiency testing, which describes the general methods that are used in proficiency testing schemes.

How is a PT program organized?

There are two important steps in the organization of a PT scheme: specifying the assigned value for the samples to be analyzed, and setting the standard deviation for proficiency assessment.

The organizer has to decide whether the assigned value and the criterion for assessing deviations should be independent of participant results or derived from the results submitted. In general, choosing assigned values and assessment criteria independently of participant results offers advantages.

Indeed, these two directly affect the scores that participants receive and therefore how they will interpret their performance in the scheme.

1. Setting the assigned value

The assigned value is the value attributed to a particular quantity being measured. It is accepted by the scheme organizer as having a suitably small uncertainty, appropriate for the given purpose.

There are a number of approaches to obtain the assigned value:

The standard deviation for proficiency assessment is set by the scheme organizer. It is intended to represent the uncertainty regarded as fit for purpose for a particular type of analysis. Ideally, the basis for setting the standard deviation should remain the same over successive rounds of the PT program so that the interpretation of performance scores is consistent from round to round.

Also, due allowance for changes in performance at different analyte concentrations is usually made to make it easier for participants to monitor their performance over time.

There are a number of different approaches for performance evaluation.

This approach to defining the standard deviation for performance evaluation is based on the results of a previous collaborative study (reproducibility experiment) of the same analytical method, where one is available. In this case, we can take the reproducibility and repeatability estimates from that study.

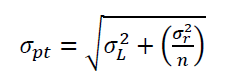

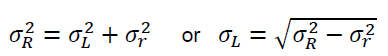

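The standard deviation for proficiency assessment, $\sigma_{pt}$, is given by

$$\sigma_{pt} = \sqrt{\sigma_L^2 + \frac{\sigma_r^2}{m}}, \qquad \sigma_L = \sqrt{\sigma_R^2 - \sigma_r^2}$$

where

$\sigma_R$ is the reproducibility standard deviation from the collaborative study,

$\sigma_r$ is the repeatability standard deviation from the same study,

$\sigma_L$ is the between-laboratory standard deviation, and

$m$ is the number of replicate measurements each participant performs in the PT round.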
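As a rough numerical illustration of combining reproducibility and repeatability estimates, the sketch below follows the relationship given in ISO 13528:2015 for this approach (stated in the docstring). The function name and the example figures are hypothetical, and the number of replicates m is an assumed input.

```python
import math


def sigma_pt_from_precision(sigma_R, sigma_r, m):
    """sigma_pt = sqrt(sigma_L^2 + sigma_r^2 / m), with sigma_L^2 = sigma_R^2 - sigma_r^2.

    sigma_R: reproducibility standard deviation from the collaborative study
    sigma_r: repeatability standard deviation from the same study
    m:       number of replicate measurements per participant in the PT round
    """
    if m < 1:
        raise ValueError("m must be at least 1")
    if sigma_R < sigma_r:
        raise ValueError("sigma_R should not be smaller than sigma_r")
    sigma_L_squared = sigma_R ** 2 - sigma_r ** 2
    return math.sqrt(sigma_L_squared + sigma_r ** 2 / m)


# Hypothetical study estimates, duplicate analysis in the PT round
print(round(sigma_pt_from_precision(sigma_R=0.5, sigma_r=0.3, m=2), 3))
```

With a single measurement per participant (m = 1) the expression reduces to the reproducibility standard deviation itself.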

This is determined by experience with previous rounds of proficiency testing for the same analyte with comparable concentrations, and where participants use compatible measurement procedures. Such evaluations will be based on reasonable performance expectations.

The standard deviation is chosen to ensure that laboratories that obtain a satisfactory score are producing results that are fit for a particular purpose, such as being related to a legislative requirement. It can also be set to reflect the perceived performance of laboratories or to reflect the performance that the PT organizer and participants would like to be able to achieve.

With this approach, the standard deviation for proficiency assessment, $\sigma_{pt}$, is calculated from the results of participants in the same round of the proficiency testing scheme.

This approach is relatively simple and has been conventionally accepted due to successful use in many situations. The data from the PT program are assessed using the robust mean of participant results as the assigned value.

When this approach is used it is usually most convenient to use a performance score such as the z score.

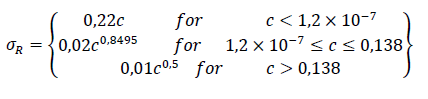

The Horwitz function is an empirical relationship based on statistics from a very large number of collaborative studies of chemical applications over an extended period of time. It describes how the reproducibility standard deviation $\sigma_R$ varies with the analyte concentration level:

$$\sigma_R = 0.02\,c^{0.8495}$$

where c is the concentration of the chemical species to be determined, expressed as a mass fraction, 0 ≤ c ≤ 1 (e.g. 1 mg/kg = $10^{-6}$).

This approach, however, does not reflect the true reproducibility of certain test materials, but it is useful when there are too few participants for any more meaningful statistical comparison.
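For readers who want to try the numbers, here is a minimal sketch of the classical Horwitz relationship, assuming the form sigma_R = 0.02 c^0.8495 with c as a mass fraction. The function name is hypothetical, and modified versions of the curve for very low or very high concentrations are not covered.

```python
def horwitz_sigma_R(c):
    """Predicted reproducibility standard deviation (as a mass fraction).

    Assumes the classical Horwitz form sigma_R = 0.02 * c**0.8495,
    where c is the analyte concentration as a mass fraction (0 <= c <= 1).
    """
    if not 0 <= c <= 1:
        raise ValueError("c must be a mass fraction between 0 and 1")
    return 0.02 * c ** 0.8495


c = 1e-6  # 1 mg/kg expressed as a mass fraction
sigma_R = horwitz_sigma_R(c)
print(f"sigma_R = {sigma_R * 1e6:.2f} mg/kg, relative SD = {sigma_R / c:.0%}")
```

At 1 mg/kg the predicted relative standard deviation is about 16%, and it grows as the concentration falls.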

The next article will discuss the assessment scoring systems commonly adopted in proficiency testing programs.

To run a successful proficiency testing (PT) program, the importance of homogeneity and stability of the PT samples prepared for an inter-laboratory comparison study cannot be overemphasized, as these two factors can adversely affect the evaluation of performance.

The PT provider must ensure that the measurand (i.e. the targeted analyte) is evenly distributed across the batch of samples and remains stable until it is analyzed at the participant’s premises. Therefore, homogeneity and stability of a bulk preparation of PT items must be assessed before the program is conducted.

Checks for sample stability are best carried out prior to circulation of PT items. The uncertainty contributors to be considered include the effects of transport conditions and any variation occurring during the PT program period.

A common model for testing stability in PT is to test a small sample of PT items before and after a PT round, to assure that no change occurred through the time of the round. One may check for any effect of transport conditions by additionally exposing the PT samples retained for the study duration to conditions representing transport conditions.
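The before-and-after comparison can be sketched as below. The acceptance criterion used here, that the two means differ by no more than 0.3 times the standard deviation for proficiency assessment, is the one given in ISO 13528 and is an assumption added for illustration; the data and function name are hypothetical.

```python
from statistics import mean


def stability_ok(before, after, sigma_pt):
    """Compare mean results obtained before and after the PT round.

    Assumed criterion: |mean(before) - mean(after)| <= 0.3 * sigma_pt.
    """
    return abs(mean(before) - mean(after)) <= 0.3 * sigma_pt


# Hypothetical replicate results on retained PT items (same units as sigma_pt)
before_round = [10.1, 9.9, 10.0]
after_round = [9.9, 10.0, 9.8]
print(stability_ok(before_round, after_round, sigma_pt=0.5))
```

The same function could be applied to items deliberately exposed to simulated transport conditions, comparing them with items kept under reference storage.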

A simple procedure for a homogeneity check

The homogeneity check aims to obtain a sufficiently small repeatability standard deviation (sr) after replicated analyses. The general procedure is as follows:

The assigned value of PT program

A critical step in the organization of a proficiency testing (PT) scheme is specifying the assigned value for the participating laboratories. The purpose is to compare the deviation of participant’s reported results from the assigned value (i.e. measurement error) with a statistical scoring criterion which is used to decide whether or not the deviation represents significant cause for concern in its performance.

ISO 13528:2015 defines assigned value as “value attributed to a particular property of a proficiency test item”. It is a value attributed to a particular quantity being measured. Such an assigned value will have a suitably small uncertainty which is appropriate for this interlaboratory comparison purpose.

Where do we obtain an assigned value?

A. Assigned value obtained by formulation

A specified known level or concentration of the target analyte is added accurately to a base material, preferably one containing no native analyte. The assigned value is then derived by calculating the analyte concentration from the masses used. In this way, the traceability of the assigned value can usually be established.

However, there may be no suitable blank or well-characterized base material available. Ensuring homogeneity of the prepared bulk material before it is distributed to the participants may also be a challenge. Furthermore, formulated samples may not be truly representative of real test materials, as the added analyte may be in a different form or less strongly bound to the matrix.
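A simple worked example of the formulation calculation is given below. It assumes a base material with no native analyte and an analyte of known purity; the function name and all masses are hypothetical.

```python
def formulated_mass_fraction(m_analyte_g, purity, m_base_g):
    """Mass fraction of analyte in a spiked material.

    Assumes the base material contains no native analyte.
    m_analyte_g: mass of analyte material added (g)
    purity:      purity of the analyte material as a fraction (0-1)
    m_base_g:    mass of base material (g)
    """
    return m_analyte_g * purity / (m_base_g + m_analyte_g)


# Hypothetical spike: 0.0500 g of 99.5% pure analyte into 1000 g of blank matrix
w = formulated_mass_fraction(0.0500, 0.995, 1000.0)
print(f"assigned value = {w * 1e6:.2f} mg/kg")
```

In practice the uncertainty of the assigned value would also be built up from the uncertainties of the weighings and the purity, which this sketch does not attempt.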

B. Assigned value is a certified reference value

In this case, the test material is a certified reference material (CRM) produced by a reputable organization, and the assigned value is the certified value, whose uncertainty is quoted on the CRM certificate. The limitations of using this assigned value are:

1. Providing every participant with a unit of the CRM can be expensive.

2. The identity of a commercial CRM must be concealed from the participants, because participants who recognize the material could compromise the testing outcome.

3. The uncertainty of some certified values may be high.

C. Assigned value is a reference value

The assigned value is determined by a single expert laboratory using a suitable primary method of analysis (e.g., gravimetry, titrimetry, isotope dilution mass spectrometry, etc.) or a fully validated test method which has been calibrated with a closely matched CRM.

D. Assigned value from consensus of expert laboratories

This assigned value is obtained from the results reported by a number of expert laboratories, with demonstrated proficiency in the measurements of interest, which analyze the material using suitable methods. However, it must be cautioned that there may be an unknown bias in the results produced by the expert laboratories.

E. Assigned value from consensus of PT scheme participants

This assigned value is derived from the results of all the participants in the proficiency testing round. It is normally based on a robust estimate to minimize the effect of extreme values in the data set.
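To show why a robust estimate is preferred, the sketch below uses the median and the scaled median absolute deviation (MADe), which are simple robust estimators; scheme organizers often use more elaborate procedures such as Algorithm A of ISO 13528, which is not reproduced here. The factor 1.25 in the uncertainty estimate follows ISO 13528 and is an assumption added for illustration, as are the data and the function name.

```python
import math
from statistics import median


def robust_consensus(results):
    """Return (x_star, s_star, u_xa) from participant results.

    x_star: median of the results (robust assigned value)
    s_star: 1.483 * median absolute deviation (robust standard deviation)
    u_xa:   1.25 * s_star / sqrt(p), assumed standard uncertainty of x_star
    """
    p = len(results)
    x_star = median(results)
    mad = median([abs(x - x_star) for x in results])
    s_star = 1.483 * mad
    u_xa = 1.25 * s_star / math.sqrt(p)
    return x_star, s_star, u_xa


# Hypothetical round with one extreme value (14.5)
data = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 14.5]
x_star, s_star, u_xa = robust_consensus(data)
print(f"x* = {x_star:.2f}, s* = {s_star:.3f}, u(x_a) = {u_xa:.3f}")
```

The extreme value of 14.5 barely influences the result, whereas the arithmetic mean of the same data would be pulled up to about 10.6.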

The quality of your analytical measurements and your laboratory’s technical competence can be enhanced if you participate satisfactorily in testing schemes in which a number of laboratories take part simultaneously. Such a scheme is known as a proficiency testing (PT) scheme.

The primary aim of PT is to allow laboratories to monitor and optimize the quality of their routine analytical measurements.

A PT scheme is usually organized by an independent body, which can be a national standards body, a learned professional organization or even a business enterprise. Basically, in chemical analysis, aliquots from homogeneous and stable test materials are distributed to a number of laboratories for analysis within a stated window of time. Each participant is given a unique identification code.

After the participants have analyzed the samples using either a test method of their choice or a stated standard method, the scheme organizer will carry out statistical analysis of all the data submitted and provide a performance report, detailing each participant’s statistical ‘score’ that allows them to judge their performance in that particular round of testing.

In other words, the participating laboratory can gain information on how their measurements compare with those of others, how their own measurements improve or deteriorate with time, and how their own measurements compare with an external quality standard.

There are a number of different scoring systems used in PT programs; the majority involve comparing the difference between the participant’s result ($x$) and a target or assigned value ($x_a$) with a quality target, which is usually a standard deviation for proficiency assessment, denoted by $\sigma_{pt}$. Each scoring system has acceptability criteria to allow participants to evaluate their performance.

Generally, we do expect some divergent results to arise even between experienced, well-equipped and well-staffed laboratories. When they do, the PT scheme helps to highlight such alarming differences and prompts these laboratories to look into their own analytical processes in order to improve the quality of their test results. Hopefully a better comparison is achieved in the next round of PT.

Amusingly, there are reports that some participating laboratories have been caught colluding in reporting their test results to the scheme organizer, particularly when the PT scheme does not involve a large number of laboratories and some of the laboratory operators know each other, for example as subsidiary laboratories of the same group. Such collusion is undesirable as it defeats the noble purpose of the PT scheme and renders the outcome of that round meaningless.

A simple explanation for such incidents is that these laboratories are not confident in their own testing and feel the need to compare results with others before submitting them to the organizer. However, when the shared results are questionable, that is, significantly different from the assigned value of the test material, the statistical plot may show a cluster of results grouped in one corner.

Of late, some scheme organizers have tried to overcome this malpractice by preparing and sending out at least two labelled test samples with close but significantly different analyte concentrations (not duplicates) to the participants. The identity of each sample is known only to the organizer.

In fact, we must not treat PT samples with extra care and attention, but run them like any other routine samples. It is quite common to see a participant repeat the analysis as many times as possible until no sample is left for future reference!

A good source of reference on statistical techniques applicable to a PT scheme is ISO 13528:2015 Statistical methods for use in proficiency testing by interlaboratory comparison.